Abstract

Background:

This study was conducted to identify the usability problems of a pharmacy information system (PIS) that is widely used in Iranian hospitals.

Methods:

Ten usability experts independently evaluated the user interface of the PIS using heuristic evaluation. They applied Nielsen’s heuristics to identify and classify usability problems and Nielsen’s severity rating scale to judge their severity.

Results:

Overall, 125 unique heuristic violations were identified as usability problems. In terms of severity, 60.8% of the problems were rated major or catastrophic. Of the 10 heuristics, “consistency and standards” and “recognition rather than recall” were the most frequently violated, whereas “error prevention” and “help users recognize, diagnose, and recover from errors” were the least frequently violated.

Conclusions:

Despite the widespread use of specialized healthcare information systems, these systems still suffer from usability problems. Such problems can put patients at risk. Moreover, they can negatively affect the effectiveness and efficiency of healthcare information systems, the satisfaction of their users, and hospital finances. Designers should base their systems on existing standards and principles, and these systems should be evaluated annually and updated based on the results.

Keywords

Evaluation Study; Pharmacy Information System; User-Computer Interface; Usability Evaluation

1. Background

Studies have shown that medical errors cause between 44,000 and 98,000 deaths a year in US hospitals and cost the US economy up to $29 billion annually (1, 2). Nowadays, pharmacy information systems (PISs) are widely used in healthcare settings to perform tasks such as patient order entry, management, and dispensing; inventory and purchasing management; pricing; charging and billing; and medication administration and reporting (utilization, workload, and financial) (3). PISs are used not only to optimize the safety and efficiency of the medication use process (4) but also to connect the hospital pharmacy department to other hospital departments (5). These systems can therefore improve cost control, patient care quality, information security, and information administration, and can ultimately reduce medication errors (6-8).

Despite the advantages of these systems, various studies have shown that some of them do not meet the needs of their users (9-11). In addition, they may cause new kinds of problems that are likely to increase the potential for error (12-14), which can in turn lead to patient harm or increased costs (15).

One of the factors that make systems inefficient is poor usability (16). The best-known definition of usability is Nielsen’s, which describes usability in terms of learnability, efficiency, memorability, errors, and satisfaction (10). Poor usability can reduce the efficiency and effectiveness of systems and the satisfaction of their users (10, 17). Systems with usability flaws can increase medication errors and may even lead to disaster (1, 2). Effective usability evaluation of these systems is therefore necessary (18).

There are a variety of usability evaluation methods (19). Heuristic evaluation is one of the most common methods for finding usability problems (20, 21). In this method, evaluators assess the usability, efficiency, and effectiveness of the interface against 10 usability heuristics (22). Its advantages include low cost and the small number of evaluators required (three to 10). Without involving users, the method assesses the system against principles that support good usability and identifies a relatively large number of usability problems within a reasonable time (21, 23).
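
Why a small panel of evaluators can be enough is often illustrated with the problem-discovery model of Nielsen and Landauer (23), in which the expected proportion of problems found by i evaluators is 1 - (1 - λ)^i, where λ is the probability that a single evaluator finds a given problem. The Python sketch below is illustrative only and was not part of this study's analysis: it assumes every problem is equally easy to find, and the value λ = 0.3 is a hypothetical choice.

```python
"""Sketch of the Nielsen-Landauer problem-discovery model.

Assumption: each evaluator independently finds any given problem with the
same probability `lam`; the value used in the demo is hypothetical.
"""


def proportion_found(evaluators: int, lam: float) -> float:
    """Expected share of all problems found by `evaluators` evaluators.

    Implements Found(i) / N = 1 - (1 - lam) ** i.
    """
    if evaluators < 0:
        raise ValueError("evaluators must be non-negative")
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lam must be a probability in [0, 1]")
    return 1.0 - (1.0 - lam) ** evaluators


if __name__ == "__main__":
    LAM = 0.3  # hypothetical per-evaluator detection probability
    for i in (1, 3, 5, 10):
        print(f"{i:2d} evaluators: {proportion_found(i, LAM):.0%} of problems")
```

Under these assumptions, three evaluators would be expected to find about two thirds of the problems (1 - 0.7^3 = 0.657) and ten evaluators about 97%, which shows the diminishing returns of adding evaluators.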

Although the PIS plays an important role in hospitals and its usability can affect the medication process, it has not yet been evaluated in Iran. The present study therefore evaluates the PIS using heuristic evaluation and reports a list of usability problems that can help designers take users’ needs into account in the design and development of PISs, so that users can perform their tasks faster and better.

2. Methods

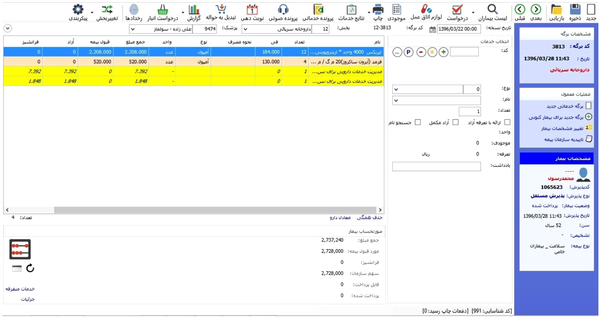

This study was conducted on the PIS used within the hospital information system (HIS) of more than 200 hospitals in Iran. Implementation of the system in hospitals began in 1997, and about 2,000 active users interact with it daily. Through the PIS, users send medication prescriptions; technicians receive these orders through the PIS and prepare them. An illustration of the PIS user interface is shown in Figure 1.

An illustration of the PIS user interface

We used heuristic evaluation, one of the most commonly used usability evaluation methods, to evaluate the PIS. It is a discount usability inspection method for computer software that helps identify usability problems in user interface (UI) design on the basis of Nielsen’s 10 usability heuristics (21) (Table 1).

Nielsen’s Usability Heuristics

| Usability Heuristic | Description |
|---|---|
| 1- Visibility of system status | The system should always keep users informed about what is going on through appropriate feedback given within reasonable time. |
| 2- Match between system and the real world | The system should speak the user’s language, with words, phrases, and concepts familiar to the user, rather than system-orientated terms. Follow real-world conventions, making information appear in a natural and logical order. |
| 3- User control and freedom | Users often choose system functions by mistake and will need a clearly marked “emergency exit” to leave the unwanted state without having to go through an extended dialog. Support undo and redo. |
| 4- Consistency and standards | Users should not have to wonder whether different words, situations, or actions mean the same thing. Follow platform conventions. |
| 5- Error prevention | Even better than good error messages is a careful design which prevents a problem from occurring in the first place. Either eliminate error-prone conditions or check for them and present users with a confirmation option before they commit to the action. |
| 6- Recognition rather than recall | Minimize the user’s memory load by making objects, actions, and options visible. The user should not have to remember information from one part of the dialog to another. Instructions for use of the system should be visible or easily retrievable whenever appropriate. |
| 7- Flexibility and efficiency of use | Accelerators - unseen by the novice user - may often speed up the interaction for the expert user such that the system can cater to both inexperienced and experienced users. Allow users to tailor frequent actions. |
| 8- Aesthetic and minimalist design | Dialogs should not contain information which is irrelevant or which is rarely needed. Every extra unit of information in a dialog competes with the relevant units of information and diminishes their relative visibility. |
| 9- Help users recognize, diagnose, and recover from errors | Error messages should be expressed in plain language (no codes), precisely indicate the problem, and constructively suggest a solution. |
| 10- Help and documentation | Even though it is better if the system can be used without documentation, it may be necessary to provide help and documentation. Any such information should be easy to search, be focused on the user’s task, list concrete steps to be carried out, and not be too large. |

The evaluation team consisted of 10 usability evaluators: a PhD in medical informatics, a PhD in health information management, a senior MSc in health information technology, and seven senior MSc students in medical informatics. All had taken theoretical and practical courses in usability engineering. Before the evaluation, the evaluators familiarized themselves with the system’s UI. Each evaluator then individually inspected the UI against the heuristics and listed the usability problems found. After all evaluations were complete, the evaluators reviewed and aggregated their findings, which yielded a set of system design violations (19). Finally, the list of usability problems was sent to all evaluators, who rated the severity of each identified problem on the basis of the following factors:

- Frequency: Is it common or rare?

- Impact: Will it be easy or difficult for the users to overcome?

- Persistence: Is it a one-time problem that users can overcome once they know about it or will the users repeatedly be annoyed by the problem? (24) (Table 2).

| Problem | Severity | Description |
|---|---|---|
| No problem | 0 | I don’t agree that this is a usability problem at all |
| Cosmetic | 1 | Need not be fixed unless extra time is available on project |
| Minor | 2 | Fixing this should be given low priority |
| Major | 3 | Important to fix, so should be given high priority |
| Catastrophe | 4 | Imperative to fix this before product can be released |
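
Because each problem in the list is rated by several evaluators on the ordinal scale above, one common approach is to average their ratings per problem. The Python sketch below is a hypothetical illustration of that step, not the study's documented procedure: the names `Severity` and `aggregate_rating` are illustrative, and rounding the mean half up to the nearest scale point is an assumption, since the study does not state how averages were mapped to categories.

```python
from enum import IntEnum


class Severity(IntEnum):
    """Nielsen's 0-4 severity scale, as listed in Table 2."""

    NO_PROBLEM = 0
    COSMETIC = 1
    MINOR = 2
    MAJOR = 3
    CATASTROPHE = 4


def aggregate_rating(ratings: list[int]) -> tuple[float, Severity]:
    """Average one problem's severity ratings across evaluators.

    Returns the mean rating and the scale category it rounds to.
    Assumption (not stated in the study): the mean is rounded half up.
    """
    if not ratings:
        raise ValueError("at least one rating is required")
    for r in ratings:
        Severity(r)  # raises ValueError for ratings outside 0-4
    avg = sum(ratings) / len(ratings)
    # avg is never negative, so int(avg + 0.5) rounds half up
    return avg, Severity(int(avg + 0.5))


if __name__ == "__main__":
    # Hypothetical ratings from three evaluators for a single problem
    avg, category = aggregate_rating([3, 4, 3])
    print(f"mean {avg:.2f} -> {category.name}")
```

With the hypothetical ratings shown, the script prints `mean 3.33 -> MAJOR`. An `IntEnum` keeps the numeric scale and the category names together, so invalid ratings are rejected by the enum lookup itself.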

This study was reviewed and approved by the Urmia University of Medical Sciences research ethics committee, Urmia, Iran.

3. Results

Ten evaluators conducted the heuristic evaluation of the PIS and identified 167 usability problems based on Nielsen’s 10 heuristics. After duplicates were removed, 125 unique problems remained. We analyzed these unique problems by severity and by violated heuristic.

In terms of violated heuristics, “consistency and standards” (n = 23, 18.40%) and “recognition rather than recall” (n = 17, 13.60%) were the most frequently violated, whereas “error prevention” (n = 9, 7.20%) and “help users recognize, diagnose, and recover from errors” (n = 9, 7.20%) were the least frequently violated, apart from “help and documentation,” for which no violations were recorded because the PIS provides no help at all (Table 3).

Identified Usability Problems per Violated Heuristics and Severity

| Violated Heuristic | Cosmetic (n) | Minor (n) | Major (n) | Catastrophe (n) | Total Violations (n) | Total Violations (%) | Average Severity |
|---|---|---|---|---|---|---|---|
| 1- Visibility of system status | 0 | 5 | 7 | 2 | 14 | 11.20 | 3 |
| 2- Match between system and the real world | 0 | 8 | 6 | 2 | 16 | 12.80 | 3 |
| 3- User control and freedom | 0 | 4 | 6 | 1 | 11 | 8.80 | 2 |
| 4- Consistency and standards | 2 | 7 | 8 | 6 | 23 | 18.40 | 4 |
| 5- Error prevention | 0 | 3 | 4 | 2 | 9 | 7.20 | 3 |
| 6- Recognition rather than recall | 1 | 4 | 7 | 5 | 17 | 13.60 | 3 |
| 7- Flexibility and efficiency of use | 0 | 7 | 5 | 3 | 15 | 12.00 | 3 |
| 8- Aesthetic and minimalist design | 2 | 3 | 6 | 0 | 11 | 8.80 | 2 |
| 9- Help users recognize, diagnose, and recover from errors | 0 | 3 | 5 | 1 | 9 | 7.20 | 3 |
| 10- Help and documentation | 0 | 0 | 0 | 0 | 0 | 0.00 | 4 |
| Total (n) | 5 | 44 | 54 | 22 | 125 | 100.00 | |
| Percent | 4.00 | 35.20 | 43.20 | 17.60 | | | |
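
As a check on the arithmetic in this section, the totals, percentages, and share of major or catastrophic problems can be re-derived from the per-heuristic counts in Table 3. The following Python sketch only transcribes those counts; the names `COUNTS`, `LEVELS`, and `summarize` are illustrative and not part of the study.

```python
"""Re-derive the totals of Table 3 from its per-heuristic severity counts."""

LEVELS = ("Cosmetic", "Minor", "Major", "Catastrophe")

# Counts transcribed from Table 3 as (cosmetic, minor, major, catastrophe)
COUNTS: dict[str, tuple[int, int, int, int]] = {
    "Visibility of system status": (0, 5, 7, 2),
    "Match between system and the real world": (0, 8, 6, 2),
    "User control and freedom": (0, 4, 6, 1),
    "Consistency and standards": (2, 7, 8, 6),
    "Error prevention": (0, 3, 4, 2),
    "Recognition rather than recall": (1, 4, 7, 5),
    "Flexibility and efficiency of use": (0, 7, 5, 3),
    "Aesthetic and minimalist design": (2, 3, 6, 0),
    "Help users recognize, diagnose, and recover from errors": (0, 3, 5, 1),
    "Help and documentation": (0, 0, 0, 0),
}


def summarize(counts):
    """Return the grand total, per-heuristic (n, %), and per-severity totals."""
    total = sum(sum(row) for row in counts.values())
    per_heuristic = {h: (sum(row), 100 * sum(row) / total) for h, row in counts.items()}
    per_level = {lvl: sum(row[i] for row in counts.values()) for i, lvl in enumerate(LEVELS)}
    return total, per_heuristic, per_level


if __name__ == "__main__":
    total, per_heuristic, per_level = summarize(COUNTS)
    for heuristic, (n, pct) in per_heuristic.items():
        print(f"{heuristic:<56} {n:>3} {pct:6.2f}%")
    for level, n in per_level.items():
        print(f"{level:<12} {n:>3} {100 * n / total:6.2f}%")
    severe = per_level["Major"] + per_level["Catastrophe"]
    print(f"Major or catastrophic: {severe} ({100 * severe / total:.1f}%)")
```

Run as a script, the last line reports 76 major or catastrophic problems, that is, 60.8% of the 125 unique problems.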

Of the 10 heuristics, eight had an average problem severity of major or catastrophic: “visibility of system status,” “match between system and the real world,” “consistency and standards,” “error prevention,” “recognition rather than recall,” “flexibility and efficiency of use,” “help users recognize, diagnose, and recover from errors,” and “help and documentation.” The average severity of problems related to the remaining two heuristics, “user control and freedom” and “aesthetic and minimalist design,” was minor.

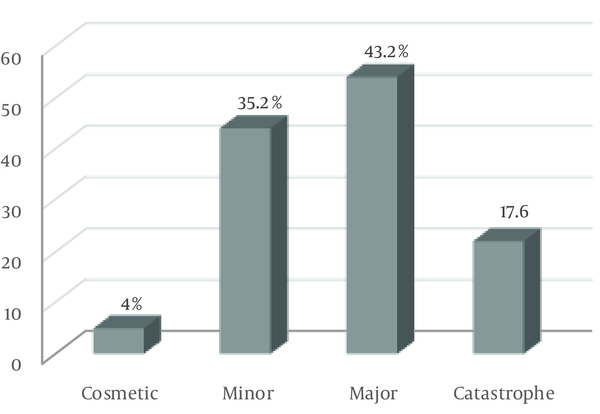

Overall, major violations (n = 54, 43.20%) were more common than minor violations (n = 44, 35.20%) and catastrophic violations (n = 22, 17.60%). Cosmetic violations were the least common (n = 5, 4.00%). In total, more than half of the problems (n = 76, 60.80%) were rated major or catastrophic (Figure 2).

Frequency of heuristic violations

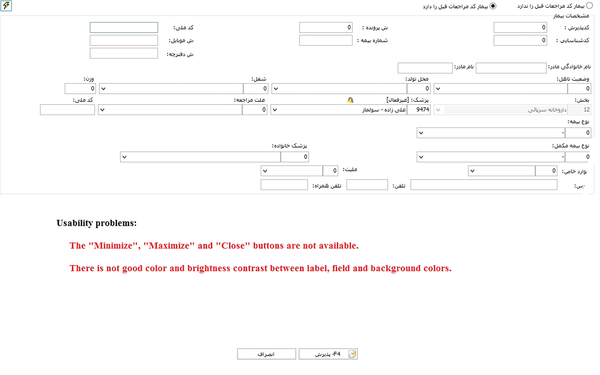

Examples of the identified usability problems, grouped by the 10 heuristics, are given below.

3.1. Visibility of System Status

Users were not kept informed of the system’s progress when there were noticeable delays (longer than 15 seconds) in the system’s response. A selected icon could not be distinguished when surrounded by unselected icons. There was no consistent icon design scheme or stylistic treatment across the system.

3.2. Match Between System and the Real World

When prompts implied a necessary action, the wording of the message was not consistent with that action. Some terms, concepts, and icons used in the system were unclear and ambiguous. The system did not draw on users’ background knowledge.

3.3. User Control and Freedom

There were no “undo” or “redo” functions. Users could not easily switch between overlapping windows. The “minimize,” “maximize,” and “close” buttons were not available.

3.4. Consistency and Standards

There were more than 20 icon types in the system. The system did not use an appropriate color scheme. Attention-getting techniques such as intensity, size, font, and color were not used with care.

3.5. Error Prevention

The system did not prevent users from making errors. The system did not caution users if they were about to make a potentially serious error. Fields in data entry screens did not contain the appropriate default values.

3.6. Recognition Rather Than Recall

Optional data entry fields were not clearly marked. In some windows, text areas did not have “breathing space” around them. There were no salient visual cues to identify the active window.

3.7. Flexibility and Efficiency of Use

The system did not provide function keys for high-frequency commands. The system did not have the ability to support both novice and expert users.

3.8. Aesthetic and Minimalist Design

Some buttons were not arranged neatly and in order on the screen. The text font was small. The system did not use a suitable color palette consistent with a minimalist design.

3.9. Help Users Recognize, Diagnose, and Recover from Errors

The error messages did not inform the users of the error’s severity. The error messages did not suggest the cause of the problems. The error messages did not indicate what action the users needed to take to correct the error.

3.10. Help and Documentation

No help or documentation was provided in the PIS.

Figure 3 shows some examples of usability problems.

Examples of usability problems

4. Discussion

The present study evaluated a PIS that is widely used in Iranian hospitals in order to identify its usability problems. Through heuristic evaluation, the evaluators identified a high number of usability problems in the PIS, more than half of which were rated major or catastrophic. The “help and documentation” heuristic is not addressed at all in the PIS, and this absence can confuse users. Our results indicated that the heuristics “consistency and standards” and “recognition rather than recall” had the largest number of violations, while “error prevention” and “help users recognize, diagnose, and recover from errors” had the fewest. These results are similar to those of other studies, such as those conducted by Khajouei et al., Atashi et al., and Nabovati et al. in Iran (25-27), and by Choi and Bakken, Georgsson et al., Joshi et al., and Mirkovic et al. in other countries, which reported a high number of violations of these heuristics (28-31). Unexpectedly, 60.8% of all violations were rated major or catastrophic; in this respect, our results are consistent with those of Okhovati et al. and Ellsworth et al. (32, 33). Despite recent advances in healthcare information systems, designers may not apply all UI design standards.

These problems have the potential to put patients at risk. In addition, they can negatively affect the effectiveness and efficiency of the systems, the satisfaction of their users, and hospital finances. Inconsistent design, inflexible handling of high-frequency commands, the inability to undo or redo actions, duplicate page titles, long registration forms, and poor distinction between mandatory and optional data entry fields are further examples of usability problems. Designers, developers, and customers can all use the results of such evaluation studies. Designers and developers should consider these usability aspects during the design and development phases in order to produce effective information systems, and customers who are aware of these problems can select the system with fewer usability problems.

From a methodological point of view, surveys and questionnaires distributed among end users are the most common methods used in usability evaluations of health-related information systems (33). Although surveys are useful for gathering data in such studies, they do not allow evaluators to identify individual usability problems. To address this limitation, we applied heuristic evaluation, recruiting 10 external evaluators to detect individual usability problems that could be targeted to improve the implemented system. However, our study has some limitations. The first is that the method is applied by usability evaluators without the involvement of real users. As a result, some of the identified problems may not bother users in real working environments, and real users would probably identify some problems that the evaluators did not recognize.

A second limitation is that the experts’ findings would have benefited from review by a pharmacist. In this phase of the study, we did not ask internal users for their opinions on the system’s usability.

4.1. Conclusions

Information systems are now widely used in the healthcare industry, and good usability is one of their most important features. Poor usability can cause user-system interaction problems that lead to inefficient systems and dissatisfied users. It is therefore important that the UIs of these systems, which are used by a wide range of users, be evaluated with various usability evaluation methods. Based on the results of this study, the most important problem is that system designers neglect the existing standards and principles of system design. Another problem is that the system provides no help at all, even though users have not received proper training in its use, which undermines its real-world usability. Designers should base their systems on existing standards and principles, and these systems should be evaluated every year and updated based on the results.

Acknowledgements

References

1. Lassetter JH, Warnick ML. Medical errors, drug-related problems, and medication errors: a literature review on quality of care and cost issues. J Nurs Care Qual. 2003;18(3):175-81. quiz 182-3. [PubMed ID: 12856901]. https://doi.org/10.1097/00001786-200307000-00003.

2. O'Malley P. Order no harm: evidence-based methods to reduce prescribing errors for the clinical nurse specialist. Clin Nurse Spec. 2007;21(2):68-70. [PubMed ID: 17308440]. https://doi.org/10.1097/00002800-200703000-00004.

3. Haux R. Health information systems - past, present, future. Int J Med Inform. 2006;75(3-4):268-81. [PubMed ID: 16169771]. https://doi.org/10.1016/j.ijmedinf.2005.08.002.

4. Boggie DT, Howard JJ. Pharmacy Information Systems. Pharmacy Informatics. 2009. p. 93-105. https://doi.org/10.1201/9781420071764-c7.

5. American Society of Health-System Pharmacists. ASHP Statement on the Pharmacist's Role in Informatics. Am J Health Syst Pharm. 2007;64(2):200-3. https://doi.org/10.2146/ajhp060364.

6. Buntin MB, Burke MF, Hoaglin MC, Blumenthal D. The benefits of health information technology: a review of the recent literature shows predominantly positive results. Health Aff (Millwood). 2011;30(3):464-71. [PubMed ID: 21383365]. https://doi.org/10.1377/hlthaff.2011.0178.

7. Donaldson MS, Corrigan JM, Kohn LT. To err is human: building a safer health system. NAP; 2000.

8. Frisse ME, Johnson KB, Nian H, Davison CL, Gadd CS, Unertl KM, et al. The financial impact of health information exchange on emergency department care. J Am Med Inform Assoc. 2012;19(3):328-33. [PubMed ID: 22058169]. [PubMed Central ID: PMC3341788]. https://doi.org/10.1136/amiajnl-2011-000394.

9. Khajouei R, Jaspers MW. The impact of CPOE medication systems' design aspects on usability, workflow and medication orders: a systematic review. Methods Inf Med. 2010;49(1):3-19. [PubMed ID: 19582333]. https://doi.org/10.3414/ME0630.

10. Rahimi B, Safdari R, Jebraeily M. Development of hospital information systems: user participation and factors affecting it. Acta Inform Med. 2014;22(6):398-401. [PubMed ID: 25684849]. [PubMed Central ID: PMC4315630]. https://doi.org/10.5455/aim.2014.22.398-401.

11. Rahimi B, Moberg A, Timpka T, Vimarlund V. Implementing an integrated computerized patient record system: Towards an evidence-based information system implementation practice in healthcare. AMIA Annu Symp Proc. 2008:616-20. [PubMed ID: 18999062]. [PubMed Central ID: PMC2655989].

12. Ash JS, Berg M, Coiera E. Some unintended consequences of information technology in health care: the nature of patient care information system-related errors. J Am Med Inform Assoc. 2004;11(2):104-12. [PubMed ID: 14633936]. [PubMed Central ID: PMC353015]. https://doi.org/10.1197/jamia.M1471.

13. Bloomrosen M, Starren J, Lorenzi NM, Ash JS, Patel VL, Shortliffe EH. Anticipating and addressing the unintended consequences of health IT and policy: a report from the AMIA 2009 Health Policy Meeting. J Am Med Inform Assoc. 2011;18(1):82-90. [PubMed ID: 21169620]. [PubMed Central ID: PMC3005876]. https://doi.org/10.1136/jamia.2010.007567.

14. Kuziemsky CE. Review of Social and Organizational Issues in Health Information Technology. Healthc Inform Res. 2015;21(3):152-60. [PubMed ID: 26279951]. [PubMed Central ID: PMC4532839]. https://doi.org/10.4258/hir.2015.21.3.152.

15. Coiera E, Ash J, Berg M. The Unintended Consequences of Health Information Technology Revisited. Yearb Med Inform. 2016;(1):163-9. [PubMed ID: 27830246]. [PubMed Central ID: PMC5171576]. https://doi.org/10.15265/IY-2016-014.

16. Kushniruk AW, Triola MM, Borycki EM, Stein B, Kannry JL. Technology induced error and usability: the relationship between usability problems and prescription errors when using a handheld application. Int J Med Inform. 2005;74(7-8):519-26. [PubMed ID: 16043081]. https://doi.org/10.1016/j.ijmedinf.2005.01.003.

17. Abran A, Khelifi A, Suryn W, Seffah A. Usability meanings and interpretations in ISO standards. Software Qual J. 2003;11(4):325-38. https://doi.org/10.1023/a:1025869312943.

18. Kushniruk AW, Patel VL. Cognitive and usability engineering methods for the evaluation of clinical information systems. J Biomed Inform. 2004;37(1):56-76. [PubMed ID: 15016386]. https://doi.org/10.1016/j.jbi.2004.01.003.

19. Nielsen J, editor. Usability inspection methods. Conference companion on Human factors in computing systems. ACM; 1994.

20. Jeffries R, Desurvire H. Usability testing vs. heuristic evaluation. ACM SIGCHI Bull. 1992;24(4):39-41. https://doi.org/10.1145/142167.142179.

21. Jeffries R, Miller JR, Wharton C, Uyeda K. User interface evaluation in the real world: a comparison of four techniques. CHI '91 Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. New Orleans, Louisiana, USA. 1991. p. 119-24.

22. Nielsen J. Ten usability heuristics. 2005, [cited 19 Dec]. Available from: http://www.nngroup.com/articles/ten-usability-heuristics/.

23. Nielsen J, Landauer TK. A mathematical model of the finding of usability problems. Proceedings of the INTERACT'93 and CHI'93 conference on Human factors in computing systems. 1993. p. 206-13.

24. Nielsen J. Severity ratings for usability problems. Papers and Essays. 1995;54.

25. Khajouei R, Zahiri Esfahani M, Jahani Y. Comparison of heuristic and cognitive walkthrough usability evaluation methods for evaluating health information systems. J Am Med Inform Assoc. 2017;24(e1):e55-60. [PubMed ID: 27497799]. https://doi.org/10.1093/jamia/ocw100.

26. Atashi A, Khajouei R, Azizi A, Dadashi A. User Interface Problems of a Nationwide Inpatient Information System: A Heuristic Evaluation. Appl Clin Inform. 2016;7(1):89-100. [PubMed ID: 27081409]. [PubMed Central ID: PMC4817337]. https://doi.org/10.4338/ACI-2015-07-RA-0086.

27. Nabovati E, Vakili-Arki H, Eslami S, Khajouei R. Usability evaluation of Laboratory and Radiology Information Systems integrated into a hospital information system. J Med Syst. 2014;38(4):35. [PubMed ID: 24682671]. https://doi.org/10.1007/s10916-014-0035-z.

28. Choi J, Bakken S. Web-based education for low-literate parents in Neonatal Intensive Care Unit: development of a website and heuristic evaluation and usability testing. Int J Med Inform. 2010;79(8):565-75. [PubMed ID: 20617546]. [PubMed Central ID: PMC2956000]. https://doi.org/10.1016/j.ijmedinf.2010.05.001.

29. Georgsson M, Staggers N, Weir C. A Modified User-Oriented Heuristic Evaluation of a Mobile Health System for Diabetes Self-management Support. Comput Inform Nurs. 2016;34(2):77-84. [PubMed ID: 26657618]. [PubMed Central ID: PMC4743707]. https://doi.org/10.1097/CIN.0000000000000209.

30. Joshi A, Arora M, Dai L, Price K, Vizer L, Sears A. Usability of a patient education and motivation tool using heuristic evaluation. J Med Internet Res. 2009;11(4). e47. [PubMed ID: 19897458]. [PubMed Central ID: PMC2802560]. https://doi.org/10.2196/jmir.1244.

31. Mirkovic J, Kaufman DR, Ruland CM. Supporting cancer patients in illness management: usability evaluation of a mobile app. JMIR Mhealth Uhealth. 2014;2(3). e33. [PubMed ID: 25119490]. [PubMed Central ID: PMC4147703]. https://doi.org/10.2196/mhealth.3359.

32. Okhovati M, Karami F, Khajouei R. Exploring the usability of the central library websites of medical sciences universities. J Librariansh Inf Sci. 2016;49(3):246-55. https://doi.org/10.1177/0961000616650932.

33. Ellsworth MA, Dziadzko M, O'Horo JC, Farrell AM, Zhang J, Herasevich V. An appraisal of published usability evaluations of electronic health records via systematic review. J Am Med Inform Assoc. 2017;24(1):218-26. [PubMed ID: 27107451]. https://doi.org/10.1093/jamia/ocw046.